Luma AI Announces Ray3 Modify, a New Model for Hybrid-AI Acting and Performance Workflows, Now Available in Dream Machine

December 18, 2025

Ray3 Modify introduces the next-generation hybrid-AI workflow for acting and performance, enabling brands and studios to guide scene evolution with greater predictability, continuity, and intent.

Keyframe Control, Character Reference, and a dramatically enhanced Modify Video bring together generative AI, human-led performance, and scene editing in AI video.

Now available in Luma AI’s Dream Machine platform, with video tools aimed at production workflows in film, advertising, and post-production.

Luma AI, the frontier artificial intelligence company building multimodal AGI, today introduced Ray3 Modify, a next-generation workflow that allows real-life actor performances to be enhanced with AI, enabling creative teams to produce Hollywood-quality performances and scenes. Ray3 Modify opens up new ways to edit recorded footage and is a visual effects powerhouse rolled into a single model. Available now on Luma AI’s Dream Machine platform, Ray3 Modify addresses one of the fundamental limitations of early AI video systems: their inability to reliably follow and preserve human performance.

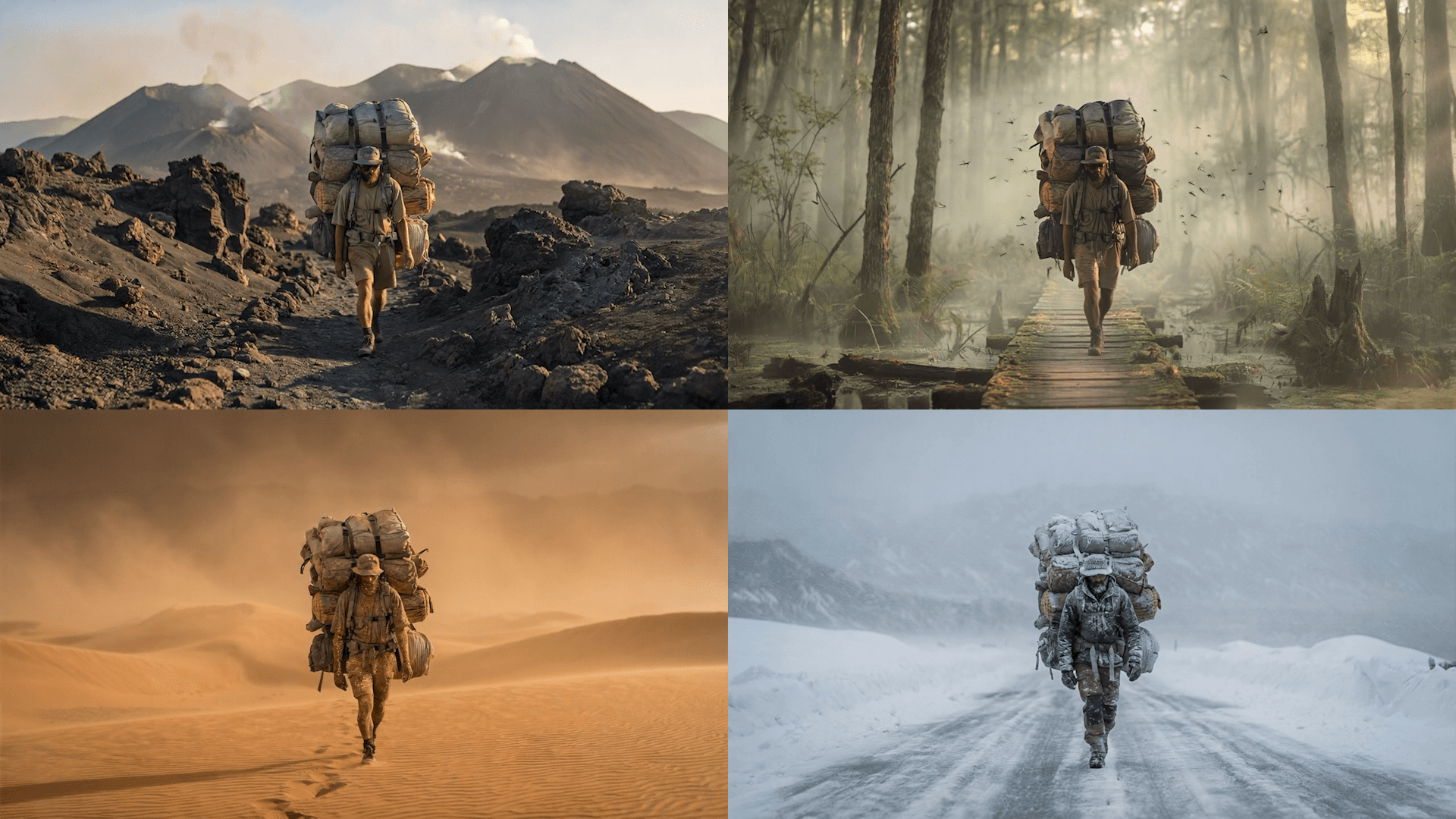

Historically, AI-generated video has struggled to preserve timing, motion, and emotional intent during acting and scene transformations. Ray3 Modify generates content in direct response to human-led input footage, enabling brands to work with actors to promote products and personalize content while staying aligned with the original performance. For filmmakers, it enables directors to film actors in any world, scene, or style.

With Ray3 Modify, the human performer, camera operator, or physical input becomes the source of direction for the AI. This enables the AI to follow real-world motion, timing, framing, and emotional delivery. By conditioning generation on human-led input footage, Ray3 Modify significantly reduces guesswork and lets creators keep shots closer to their original intent.

Ray3 Modify enables a new class of AI workflows for production, where predictability, continuity, and repeatability are essential. Ray3 Modify preserves original performance while allowing creators to change environments, styling, cinematography, and visual interpretation with intentional, scene-aware fidelity.

“Generative video models are incredibly expressive but also hard to control. Today, we are excited to introduce Ray3 Modify, which blends the real world with the expressivity of AI while giving full control to creatives,” said Amit Jain, CEO and co-founder of Luma AI. “This means creative teams can capture performances with a camera and then immediately modify them to be in any location imaginable, change costumes, or even go back and reshoot the scene with AI, without recreating the physical shoot.”

NEW CAPABILITIES IN RAY3 MODIFY EXTEND RAY3’S LEADERSHIP IN AI PRODUCTION

Ray3 Modify introduces four major advancements designed for real creative pipelines used by media, advertising, and film VFX teams and other creative professionals:

Keyframes (Start & End Frames)

Ray3 Modify brings Start and End Frame control to the video-to-video workflow for the first time. This allows creative teams to guide transitions, control character behavior, and maintain spatial continuity across longer camera moves, reveals, and complex scene blocking.

Character Reference

Character Reference applies any custom character identity to an actor’s original performance, a crucial capability for actor-led AI projects. For the first time, it can lock the likeness, costume, and identity of a specific character so they stay consistent across an entire shot.

Performance Preservation

Ray3 Modify preserves an actor’s original motion, timing, eye line, and emotional delivery as the foundation of the scene, while allowing visual attributes and environments to be intentionally transformed.

Enhanced Modify Video Pipeline

A new high-signal model architecture delivers more reliable adherence to physical motion, composition, and performance. Scenes now change in ways that respect the human-led input footage, enabling intentional edits without destabilizing continuity or identity.

Together, these capabilities establish Ray3 Modify as a next-generation AI video tool purpose-built for hybrid-AI workflows, where creative authority starts with the performer or camera, and the AI extends, interprets, or transforms that direction.

About Luma AI

Luma AI is building multimodal general intelligence that can generate, understand, and operate in the physical world. Its flagship platform, Dream Machine, enables creatives everywhere to generate professional-grade video and images. In 2025, Luma released Ray3, the world’s first reasoning video model capable of creating physically accurate videos, animations, and visuals. Luma’s models are used by leading entertainment studios, advertising agencies, and technology partners worldwide, including Adobe and AWS, and are available via subscription or API. The company is backed by HUMAIN, Andreessen Horowitz, Amazon, AMD Ventures, NVIDIA, Amplify Partners, Matrix Partners, and angels from across technology and entertainment.

Media Contacts

Peter Binazeski

Head of Communications

Luma AI

peter@lumalabs.ai