Creative Intelligence platform for magical AI products

Build and scale creative products with the world's most popular and intuitive video and image generation models through the Luma API

Find a plan that fits you

Build

Access state-of-the-art image and video generation features through easy-to-use endpoints. Build and iterate on video generation for your business. The Build plan includes:

- Intelligent instruction system

- Text to Video, Image to Video

- Camera Control, Extend, Loop

- Billed via usage credits

- Hyperfast generation time

- You own your inputs and outputs

Scale

Scale your creative AI products with higher rate limits and hands-on support from the Luma team.

- All Build tier capabilities

- Higher rate limits

- Hyperfast generation time

- Onboarding support

- Ongoing engineering support

- Billed via monthly invoices

- You own your inputs and outputs

Luma Ray2

Video Model Capabilities

Production Ready

Frontier Text-to-Video Model

Ray2 marks the beginning of a new generation of video models capable of producing fast coherent motion, ultra-realistic details, and logical event sequences. This increases the success rate of usable generations and makes videos generated by Ray2 substantially more production-ready.

Natural Motion

Generate action-packed shots from simple text. From high-speed car chases to dynamic human action, Ray2 brings a whole new level of motion fidelity. Smooth, cinematic, and jaw-dropping—transform your vision into reality.

Storytelling

Tell your story with stunning, cinematic visuals. Ray2 lets you craft breathtaking scenes with precise camera movements—sweeping panoramas, intimate close-ups, and dynamic tracking shots—all from text.

Content Creation

Elevate your content creation toolkit with Ray2. Whether for product promos, service showcases, or storytelling, generate high-quality video clips in seconds. Engage audiences, boost brand appeal, and create with ease.

Visual Effects

Incorporate visual effects with efficiency. Ray2 helps generate pre-visualizations, realistic backgrounds, and initial VFX sequences—all without extensive location shoots. Cut costs, keep quality.

Luma Photon

Image Model Capabilities

State-of-the-art creative output, from realistic to stylized

Luma Photon delivers industry-specific visual excellence, crafting images that align perfectly with professional standards - not just generic AI art. From marketing to creative design, each generation is purposefully tailored to your industry's unique requirements.

State-of-the-art prompt following and text generation

Luma Photon sets a new standard in visual precision. Its remarkable understanding of your prompts ensures every element - colors, compositions, and even text - is generated with unprecedented accuracy, exactly as you envision.

| Resolution/Model | Stable Diffusion 3.5 | Ideogram | Midjourney | Flux 1.1 | Luma Photon |
|---|---|---|---|---|---|
| 1080p | N/A | ¢ 6.0 | ¢ 5.0 | ¢ 6.0 | ¢ 1.6 |
| 1080p fast | N/A | N/A | N/A | N/A | ¢ 0.4 |
| 720p (coming soon) | ¢ 6.5 | N/A | N/A | ¢ 5.0 | ¢ 0.8 |
| 720p fast (coming soon) | ¢ 4.0 | N/A | N/A | ¢ 2.5 | ¢ 0.2 |

800%

Faster & Cheaper

Luma Image Model is built on our unique universal fusion model architecture (same as our video model) and brings groundbreaking efficiency through our research, without ever compromising quality.
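To put the comparison table above into concrete terms, here is a minimal sketch that computes how each listed model's 1080p price compares with Luma Photon's, and what a batch would cost. It assumes the table's figures are prices per image in US cents; the function names `cost_ratios` and `batch_cost_dollars` are illustrative and not part of any Luma tooling.

```python
# Prices copied from the 1080p row of the comparison table.
# Assumption: values are US cents per generated image; None marks "N/A".
PRICES_1080P = {
    "Stable Diffusion 3.5": None,
    "Ideogram": 6.0,
    "Midjourney": 5.0,
    "Flux 1.1": 6.0,
    "Luma Photon": 1.6,
}


def cost_ratios(prices, baseline="Luma Photon"):
    """Return each model's price as a multiple of the baseline price."""
    base = prices[baseline]
    return {
        model: round(price / base, 3)
        for model, price in prices.items()
        if price is not None and model != baseline
    }


def batch_cost_dollars(price_cents, n_images):
    """Cost in US dollars of generating n_images at price_cents each."""
    return price_cents * n_images / 100


if __name__ == "__main__":
    for model, ratio in cost_ratios(PRICES_1080P).items():
        print(f"{model}: {ratio}x the Luma Photon 1080p price")
    # Cost of a 10,000-image batch at the listed Luma Photon 1080p price.
    print(f"${batch_cost_dollars(PRICES_1080P['Luma Photon'], 10_000):.2f}")
```

Models with an "N/A" cell are skipped rather than treated as free, so the ratios cover only the prices the table actually lists.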

Character reference

Generate consistent character variations from a single reference photo. Luma Photon maintains precise facial features and identity across different poses, expressions, and scenarios.

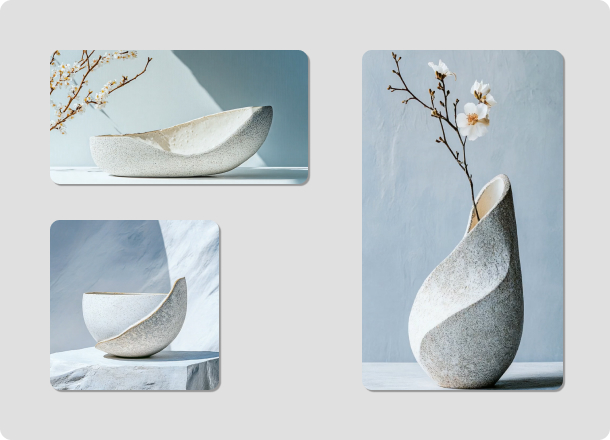

Visual reference

Apply and blend style references with exact control. Luma Photon captures the essence of each reference image, letting you combine distinct visual elements while maintaining professional quality.

Luma Ray1.6

Video Model Capabilities

Text to Video

Ray and Photon's intuitive text instructions mean users do not need to learn prompt engineering, so you can build generative products that reach new markets.

Image to Video

Build workflows that take static images and instantly create magical, high-quality animations. Instruct Ray in natural language to create narratives.

Keyframe

Control the narrative that Ray generates with start and end image keyframes…

Extend

…and extend these narratives into stories. All without any pixel editing complexity in your apps.

Loop

Create seamless loops for engaging UIs, product marketing, and backgrounds.

Camera Control

A groundbreaking generative camera lets even your most inexperienced users get videos looking just right with simple text instructions.

Variable Aspect Ratio

Your app can now produce content perfect for various platforms without complex video and image editing UIs.
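The capabilities in this section (text to video, image to video, keyframes, extend, loop, and aspect ratio) can be pictured as options on a single generation request. The sketch below only builds a request body and makes no network call. The field names (`keyframes`, `frame0`, `frame1`, `loop`, `aspect_ratio`), the model identifier `ray-1-6`, and the helper `build_generation_request` are assumptions for illustration and are not confirmed by this page; check them against the current Luma API reference before use. Camera control is expressed in the prompt text, in line with the "simple text instructions" described above.

```python
from typing import Optional


def build_generation_request(
    prompt: str,
    *,
    model: str = "ray-1-6",              # assumed model identifier
    aspect_ratio: str = "16:9",           # e.g. "9:16" for vertical video
    loop: bool = False,                   # request a seamless loop
    start_image_url: Optional[str] = None,       # image to video / start keyframe
    end_image_url: Optional[str] = None,         # end keyframe
    extend_generation_id: Optional[str] = None,  # earlier generation to extend
) -> dict:
    """Build a hypothetical JSON body for a video generation request."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if start_image_url and extend_generation_id:
        raise ValueError("use either a start image or a generation to extend")

    payload = {
        "prompt": prompt,
        "model": model,
        "aspect_ratio": aspect_ratio,
        "loop": loop,
    }

    # Keyframes pin the first and/or last frame of the clip (assumed schema).
    keyframes = {}
    if start_image_url:
        keyframes["frame0"] = {"type": "image", "url": start_image_url}
    if extend_generation_id:
        keyframes["frame0"] = {"type": "generation", "id": extend_generation_id}
    if end_image_url:
        keyframes["frame1"] = {"type": "image", "url": end_image_url}
    if keyframes:
        payload["keyframes"] = keyframes
    return payload


if __name__ == "__main__":
    # Text to video with a camera instruction written into the prompt.
    print(build_generation_request("camera orbit left around a red vintage car"))
    # Image to video in a vertical format, looping (placeholder URL).
    print(build_generation_request(
        "gentle waves at sunset",
        aspect_ratio="9:16",
        loop=True,
        start_image_url="https://example.com/start.jpg",
    ))
```

Keeping payload construction in a pure function makes the options easy to validate and unit test before any request is sent.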

Cutting-edge image and video models at your fingertips

A new creative intelligence to partner with people and help them make better things


Video is the carrier of culture, ideas, and connections around the world. Video is a universal language. Unlike text, creating video is a physical process, and editing high-dimensional video data is very difficult. This has meant that the few with the means of production create, and most of us just passively consume. They tell the stories and we all just listen.

Video can be the most effective medium of visual storytelling.

Video — if made as universally manipulable as text — can be the most effective medium of thought.

To make this happen, we have been training Ray, a family of generative AI models capable of producing and manipulating video. We have seen explosive adoption of Ray v1, far more than we ever anticipated and in ways we never expected. This means we should go faster.

The Luma API

We are launching the Luma API in beta today with the latest family of Ray v1.6 models. It includes high-quality text-to-video, image-to-video, video extension, and loop creation, as well as Luma's groundbreaking camera control capabilities.

Through Luma's research and engineering we aim to bring about the age of abundance in visual exploration and creation so more ideas can be tried, better narratives can be built, and diverse stories can be told by those who never could before. We are pricing the API for such a future at mere cents per video and will work to build even better efficiencies. This is just the start.

You start by buying credits that are consumed as you use the API. For higher tiers of usage or for enterprises, please reach out to us so we can scale the service to fit your needs. Inputs you provide and the outputs you generate are not used in training unless you explicitly ask us to do so.

Creative intelligence in your products

To build intelligence that can keep pace with humans, we are working on models that fuse video, images, audio, 3D, and language in pre-training, similar to how the human brain learns and works. With such rich context, these models exhibit an understanding of causality and follow user intent like intelligent partners.

Building with the Luma API gives you access to this creative intelligence and helps you bring value to markets by accomplishing previously impossible things.

We are excited to learn and grow with you!

Moderation Controls and Responsible Use

Video is a powerful and persuasive medium, and to prevent misuse, we have developed a multi-layered moderation system that combines AI filters with human oversight. Our API allows you to tailor this system to match the preferences of your market and users. With ongoing feedback and learning, we continuously refine our approach to ensure our models and products comply with legal standards and are used constructively.
